Active projects help ideas stick; students learn more when they build and test concepts instead of just reading. What if AI activities for high school students could turn curiosity about chatbots, coding, machine learning projects, computer vision labs, and ethics into short lesson plans students actually enjoy? Use these ideas to easily engage high school students in hands-on, fun, and inspiring AI activities that spark curiosity, build practical skills, and make learning AI exciting and relevant.

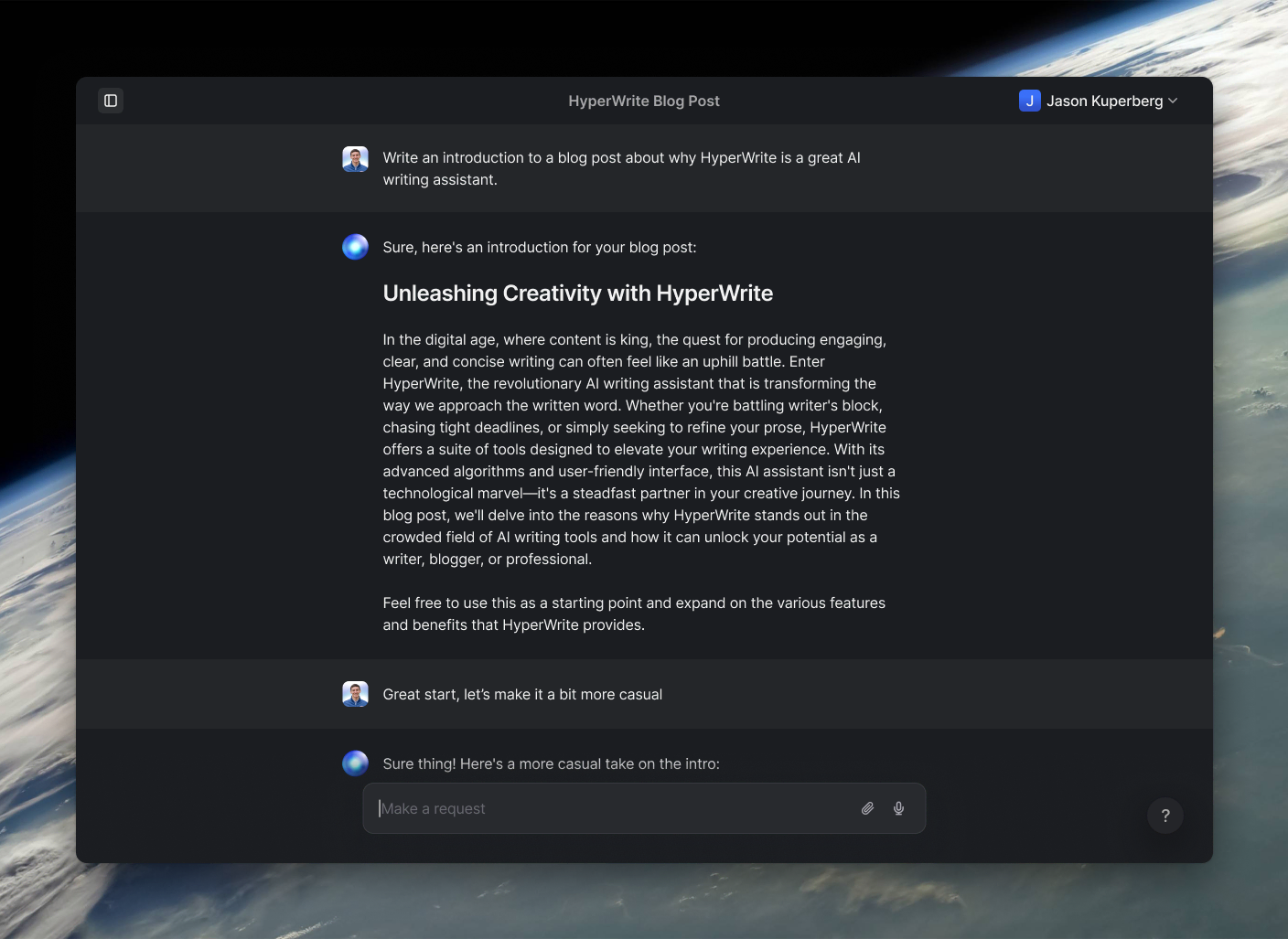

To help with that, HyperWrite's AI writing assistant turns your ideas into clear lesson plans, student prompts, worksheets, project outlines, and assessment rubrics you can adapt for Python, Scratch, or simple data science labs. It saves prep time and helps you match activities to different skill levels so students stay engaged and leave with fundamental skills.

Summary

- Hands-on AI activities convert abstract concepts into practical skills, with 85% of students reporting that AI tools significantly improved their learning efficiency according to Zendy's 2025 survey.

- Embedding process requirements, such as annotated sources and draft reflections, prevents the superficial use of AI, a practice supported by 70% of educators who believe AI activities are crucial for developing critical thinking skills.

- A practical curriculum can be built from a diverse menu: the article lists 22 hands-on AI projects that span coding, data science, ethics, and creative work to meet different interests and skill levels.

- Widespread tool adoption means assessment design matters, since over 50% of teachers have integrated AI into high school curricula and poor assessment routines will scale predictable failure modes.

- Student engagement rises when activities require evidence of process, with 75% of high school students reporting increased engagement through AI activities when reflections and citations are enforced.

- Prioritizing transfer over neat outputs and requiring three tasks per activity (generate, critique, and justify) keeps assignments measurable as complexity increases and preserves evaluative skill.

- HyperWrite's AI writing assistant addresses this by transforming activity ideas into clear lesson plans, student prompts, worksheets, project outlines, and citation-backed assessment rubrics, helping teachers maintain consistent process artifacts.

Why AI Activities are Essential for Student Learning

AI is already woven into daily life and work, and hands-on AI activities make that reality meaningful for students by converting abstract ideas into skills they can practice and explain. Rather than merely memorizing definitions, students build critical thinking, problem-solving, and digital literacy because these activities require them to:

- Test assumptions

- Justify sources

- Iterate on real-world outputs

Why Does Building with AI Make Abstract Ideas Tangible?

When students prototype prompts, tune parameters, or evaluate model outputs, they move from passive reception to active experimentation. That shift feels like moving from reading sheet music to playing an instrument: the rules matter only when you try to perform. It also matters in practice: Zendy’s 2025 survey shows that 85% of students report that AI tools have significantly improved their learning efficiency.

In classrooms where projects require students to cite sources and annotate AI-generated drafts, I've seen a consistent pattern: learners form small study groups, challenge model claims, and treat mistakes as data to debug rather than as failures.

Can AI Activities Replace Teachers or Traditional Methods?

No, they augment them. The familiar approach remains direct instruction and teacher-led feedback, as it provides structure and accountability. That structure breaks down when tools are used without scaffolds, which is why many teachers get exhausted by students relying on AI for notes or homework, creating a gap between submitted work and demonstrated learning.

Process Requirements for AI-Based Work

The practical fix is to embed process requirements: drafts with highlighted sources, reflective notes on what the AI got wrong, and short in-class demonstrations of problem-solving. That pattern aligns with Zendy’s 2025 findings, which report that 70% of educators consider AI-based activities essential for building students’ critical thinking skills.

When students are required to produce process artifacts, AI functions as a scaffold that reinforces assessment rather than weakening it.

What Platforms Engage Students?

Duolingo for language practice, DreamBox for adaptive math, and Kahoot for lively checks work because they combine instant feedback with clear goals and short cycles of effort. The familiar workaround for tracking progress is cobbling together multiple apps and spreadsheets. That approach is comfortable, but as assignments and stakeholders multiply, context fragments and teachers spend disproportionate time stitching evidence together.

Centralized Research and Rigor

Platforms like HyperWrite offer a different approach: they centralize citation-backed research, task-specific templates, and personalized completions. Teachers can set project constraints and then review consistent artifacts, which reduces the time spent chasing sources while maintaining assessment rigor.

How Do We Keep AI Activities Inclusive and Accountable?

This is where design choices matter. If an activity hands students a finished product, engagement tends to collapse. If it asks them to explain the model’s reasoning, test edge cases, and submit annotated revisions, learning deepens. For neurodivergent students, that means designing multi-step tasks with:

- Checkpoints

- Clear rubrics

- Options for oral or visual evidence of understanding

AI as Training Wheels for Competence

That pattern appears consistently in classrooms and clubs: when we require students to produce short process logs and peer reviews, the tools become training wheels for competence rather than a bypass for it. Practically, use adaptive prompts, time-boxed reflections, and peer-teaching moments so the AI amplifies student thinking instead of masking it.

How Should Teachers Design One Effective AI Activity?

If you need a single rule, prioritize transfer over output. Activities should force students to do three things: generate, critique, and justify. Start with a tight prompt, require a one-paragraph critique that points out two factual or logical errors, and finish with a short annotated bibliography.

That constraint keeps the task measurable as complexity rises, and it preserves the evaluative skill teachers care about. Think of AI tools like a power tool in a woodshop, useful only when students learn safe technique and inspection, not as a replacement for apprenticeship.

What Typically Breaks When Schools Adopt AI Poorly?

The failure mode is predictable: teachers adopt tools for convenience, assessment criteria remain the same, and students submit superficially polished work that reveals little about their learning. The result is frustration on both sides, with teachers doubting the validity of assessments and students feeling rewarded for surface polish.

Procedural Correction and Assessment

The correction is procedural. Build assessments around process artifacts, utilize citation checks, and incorporate live demonstrations where students must explain their decisions in real-time. That simple shift in design changes everything about accountability and skill development, and it opens a clear path to classroom projects that are both efficient and honest.

The next part reveals what happens when you turn that design into actual classroom activities, and the outcome is more surprising than most teachers expect.

22 Interesting AI Activities for High School Students

Use AI as a creative lab: try different roles, formats, and constraints so students learn by doing, not by passively receiving answers.

1. The Great Debate

- Purpose: Build argumentation, critical thinking, and public speaking confidence.

- Skills developed: Sourcing evidence, rebuttal framing, rhetorical clarity, adaptability under pressure.

- Tools/Materials: Chatbot (ChatGPT, Gemini), curated source packets, rubric for evidence and persuasion.

- Example: Have students research a historical figure, adopt that persona, and run a simulated debate with an AI opponent using a base prompt such as, “You are Alexander Hamilton and I am Thomas Jefferson. Let’s debate the role of the federal government. You open.”

- Best practices: Require students to submit the debate transcript with two annotated source citations and a 200-word reflection on what the AI misunderstood or where their argument improved.

2. Story Collaborator

- Purpose: Spark narrative starts and teach iterative revision.

- Skills developed: Voice development, plot shaping, scene-level pacing.

- Tools/Materials: Writing editor, prompt templates, versioned drafts.

- Example: Students request an opening line, write two pages, then ask the AI for a plot twist and incorporate it.

- Best practices: Insist the student’s draft remains the primary text, and require a margin note indicating which sentences came from the model and why they accepted or changed them.

3. Mock Career Interview

- Purpose: Build interview instincts, professional vocabulary, and reflection on career fit.

- Skills developed: Situational answers, industry terminology, feedback incorporation.

- Tools/materials: Role-play prompts, voice-enabled chat, interview rubric.

- Example: “Act as a hospital administrator interviewing a candidate for a nursing assistant internship.”

- Best practices: Record sessions, ask the AI to rate answers on clarity and specificity, and require the student to produce an improvement plan with two concrete actions.

4. Study Buddy

- Purpose: Provide focused retrieval practice and targeted explanations without giving away answers.

- Skills developed: Metacognition, spaced recall, question formulation.

- Tools/materials: Teacher-configured chatbot that provides hints only, question banks, self-check sheets.

- Example: Student asks for multiple-choice practice on thermodynamics, then explains errors to the bot.

- Best practices: Use teacher-set guardrails so the bot gives nudges, not answers, and require a one-paragraph reflection on misconceptions found.

5. Call-In Radio Show

- Purpose: Synthesize multiple sources into a concise explainer, and practice critical questioning.

- Skills developed: Summarization, source evaluation, live questioning.

- Tools/materials: NotebookLM or similar for source ingestion, audio player, question tracker.

- Example: Upload a set of articles on a historical event and generate a 6-minute host script students can fact-check.

- Best practices: Have students submit three genuine follow-up questions and one source they would have added.

6. Rock, Paper, Scissors Game

- Purpose: Teach basic programming logic and user input/output.

- Skills developed: Conditionals, randomness, debugging.

- Tools/materials: Python or Java IDE, sample code templates.

- Example: Students build a CLI or GUI version where the AI picks moves via random number generation.

- Best practices: Ask students to add logging to analyze patterns and write a short note on predictability over time.
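
One way the random-move opponent and the logging step might look in Python is sketched below. The names `judge` and `play_rounds` and the tuple-based log format are illustrative assumptions, not part of any provided template.

```python
import random
from collections import Counter

MOVES = ("rock", "paper", "scissors")
# Each move maps to the move it defeats.
BEATS = {"rock": "scissors", "paper": "rock", "scissors": "paper"}


def judge(player, computer):
    """Return 'win', 'lose', or 'draw' from the player's point of view."""
    if player == computer:
        return "draw"
    return "win" if BEATS[player] == computer else "lose"


def play_rounds(player_moves, rng=None):
    """Play one round per player move; return a log of (player, computer, result)."""
    rng = rng or random.Random()
    log = []
    for move in player_moves:
        computer = rng.choice(MOVES)  # the "AI" picks uniformly at random
        log.append((move, computer, judge(move, computer)))
    return log


if __name__ == "__main__":
    log = play_rounds(["rock", "paper", "scissors", "rock"], random.Random(0))
    for entry in log:
        print(entry)
    # Tally outcomes so students can discuss patterns in the log.
    print(Counter(result for _, _, result in log))
```

Passing a seeded `random.Random` makes a run repeatable, which helps when students compare logs and discuss whether the computer's choices are predictable.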

7. Image Classifier

- Purpose: Learn dataset creation, labeling, and model evaluation.

- Skills developed: Data curation, training-validation splits, confusion matrix interpretation.

- Tools/materials: Image datasets, TensorFlow or similar, labeled folders.

- Example: Classify photos into animals versus vehicles and report precision/recall.

- Best practices: Enforce small, balanced datasets and require a one-page analysis of failure cases.
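
Before students reach for a framework's built-in metrics, it can help to compute precision and recall by hand. The sketch below assumes predictions are already available as plain label lists; the function names and the six sample labels are made up for illustration.

```python
def confusion_counts(y_true, y_pred, positive):
    """Count true/false positives and negatives for one 'positive' class."""
    tp = fp = fn = tn = 0
    for truth, pred in zip(y_true, y_pred):
        if pred == positive and truth == positive:
            tp += 1
        elif pred == positive:
            fp += 1
        elif truth == positive:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


def precision_recall(y_true, y_pred, positive):
    """Precision = TP / (TP + FP); recall = TP / (TP + FN)."""
    tp, fp, fn, _ = confusion_counts(y_true, y_pred, positive)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Hypothetical labels for six test images.
Y_TRUE = ["animal", "animal", "animal", "vehicle", "vehicle", "vehicle"]
Y_PRED = ["animal", "animal", "vehicle", "vehicle", "animal", "vehicle"]

if __name__ == "__main__":
    print(confusion_counts(Y_TRUE, Y_PRED, "animal"))
    print(precision_recall(Y_TRUE, Y_PRED, "animal"))
```

The misclassified items (here, one animal labeled as a vehicle and one vehicle labeled as an animal) are natural starting points for the failure-case analysis.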

8. Pong Game

- Purpose: Combine simple game development with adaptive opponent logic.

- Skills developed: Event loops, simple reinforcement ideas, difficulty scaling.

- Tools/materials: Python with Pygame or JavaScript with canvas.

- Example: Implement a paddle that adjusts speed based on recent player hits.

- Best practices: Log difficulty adjustments and ask students to explain at which skill bands the opponent fails to adapt.
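
The adaptive opponent can be prototyped separately from any game loop. The following is a minimal sketch of one possible rule, assuming the paddle speeds up when the player returns most recent balls and slows down when the player misses most of them; the class name, thresholds, and step size are all illustrative choices rather than Pygame features.

```python
from collections import deque


class AdaptivePaddle:
    """Adjusts opponent paddle speed from a sliding window of player results."""

    def __init__(self, speed=4.0, min_speed=2.0, max_speed=10.0, window=5):
        self.speed = speed
        self.min_speed = min_speed
        self.max_speed = max_speed
        self.recent = deque(maxlen=window)  # True = player returned the ball
        self.log = []  # (hit_rate, new_speed) pairs for later analysis

    def record_rally(self, player_hit):
        """Record one rally outcome and return the updated paddle speed."""
        self.recent.append(bool(player_hit))
        hit_rate = sum(self.recent) / len(self.recent)
        if hit_rate > 0.7:      # player is doing well: make it harder
            delta = 0.5
        elif hit_rate < 0.3:    # player is struggling: ease off
            delta = -0.5
        else:
            delta = 0.0
        self.speed = min(self.max_speed, max(self.min_speed, self.speed + delta))
        self.log.append((hit_rate, self.speed))
        return self.speed


if __name__ == "__main__":
    paddle = AdaptivePaddle()
    for hit in [True, True, True, False, False, False, False]:
        print(paddle.record_rally(hit))
```

Because every adjustment lands in `log`, students can plot speed against hit rate and point to the ranges where the opponent stops adapting, for example once the speed hits its minimum or maximum.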

9. Simple Chatbot

- Purpose: Teach basic NLP concepts and response flow design.

- Skills developed: Tokenization, intent mapping, response templates.

- Tools/Materials: Python, NLTK or spaCy, simple rule sets.

- Example: A homework-help bot that asks clarifying questions before offering hints.

- Best practices: Require a conversation map and a test suite of 20 user inputs showing expected vs actual responses.
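
A rule-based version of this bot needs only the standard library, which makes the tokenization and intent-mapping steps easy to inspect before moving to NLTK or spaCy. The intents, keywords, and responses below are hypothetical placeholders, and `run_suite` is one possible shape for the expected-versus-actual test suite.

```python
import re

# Hypothetical intents and keyword sets; students would design their own.
INTENTS = {
    "greeting": {"hello", "hi", "hey"},
    "homework_help": {"homework", "help", "stuck", "problem"},
    "goodbye": {"bye", "goodbye", "thanks"},
}

RESPONSES = {
    "greeting": "Hi! Which subject are you working on?",
    "homework_help": "Which part is confusing? Tell me what you have tried so far.",
    "goodbye": "Good luck! Come back if you get stuck.",
    "unknown": "Could you rephrase that?",
}


def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def detect_intent(text):
    """Pick the intent whose keywords overlap most with the input tokens."""
    tokens = set(tokenize(text))
    best, best_overlap = "unknown", 0
    for intent, keywords in INTENTS.items():
        overlap = len(tokens & keywords)
        if overlap > best_overlap:
            best, best_overlap = intent, overlap
    return best


def run_suite(cases):
    """Return (text, expected, actual) for every case that does not match."""
    return [(text, expected, detect_intent(text))
            for text, expected in cases
            if detect_intent(text) != expected]


if __name__ == "__main__":
    cases = [("Hi there", "greeting"), ("I'm stuck on my homework", "homework_help")]
    print(run_suite(cases))  # an empty list means every case passed
    print(RESPONSES[detect_intent("I'm stuck on my homework")])
```

Note that the help response asks a clarifying question instead of giving an answer, matching the activity's goal. Ties between intents resolve to whichever intent is listed first, a limitation students can document in their conversation map.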

10. Email Spam Filter

- Purpose: Apply supervised learning to a real-world signal problem.

- Skills developed: Feature extraction, model selection, handling imbalanced data.

- Tools/materials: Labeled email dataset, Naive Bayes or simple neural network libraries.

- Example: Train a filter and measure false positive rate on a holdout set.

- Best practices: Make students present a mitigation plan for false positives and explain tradeoffs.
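
To make the Naive Bayes option concrete, here is a from-scratch sketch using word counts with add-one (Laplace) smoothing, plus a false positive rate on a holdout set. The tiny `TRAIN` and `HOLDOUT` lists are invented for illustration, and the sketch assumes both classes appear in the training data; a real project would use a proper labeled dataset and likely a library implementation.

```python
import math
from collections import Counter

LABELS = ("spam", "ham")


def tokenize(text):
    return text.lower().split()


def train(examples):
    """Count words per label. examples: list of (text, label) pairs."""
    word_counts = {label: Counter() for label in LABELS}
    doc_counts = Counter()
    for text, label in examples:
        doc_counts[label] += 1
        word_counts[label].update(tokenize(text))
    vocab = set(word_counts["spam"]) | set(word_counts["ham"])
    return word_counts, doc_counts, vocab


def predict(model, text):
    """Return the label with the higher log-probability score."""
    word_counts, doc_counts, vocab = model
    total_docs = sum(doc_counts.values())
    scores = {}
    for label in LABELS:
        score = math.log(doc_counts[label] / total_docs)  # class prior
        total_words = sum(word_counts[label].values())
        for word in tokenize(text):
            if word in vocab:  # ignore words never seen in training
                # Add-one smoothing keeps unseen word/label pairs above zero.
                score += math.log((word_counts[label][word] + 1)
                                  / (total_words + len(vocab)))
        scores[label] = score
    return max(scores, key=scores.get)


def false_positive_rate(model, holdout):
    """Share of legitimate (ham) messages wrongly flagged as spam."""
    ham = [text for text, label in holdout if label == "ham"]
    flagged = sum(predict(model, text) == "spam" for text in ham)
    return flagged / len(ham) if ham else 0.0


# Invented toy data, far too small for real conclusions.
TRAIN = [
    ("win free prize now", "spam"),
    ("free money click now", "spam"),
    ("claim your free prize", "spam"),
    ("meeting notes for monday", "ham"),
    ("homework due monday", "ham"),
    ("lunch meeting tomorrow", "ham"),
]
HOLDOUT = [
    ("free prize inside", "spam"),
    ("monday meeting agenda", "ham"),
    ("notes for homework", "ham"),
]

if __name__ == "__main__":
    model = train(TRAIN)
    print(predict(model, "free prize"))
    print(false_positive_rate(model, HOLDOUT))
```

Measuring false positives separately from overall accuracy matters here because flagging a real message as spam usually costs more than letting one spam message through, which is the tradeoff the mitigation plan should address.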

11. Unbeatable Tic-Tac-Toe

- Purpose: Combine GUI work with algorithmic thinking.

- Skills developed: Minimax logic, GUI integration, state evaluation.

- Tools/materials: Python with Tkinter, game engine scaffolding.

- Example: Build an opponent that never loses and log decisions.

- Best practices: Require an explanation of one critical branch where the AI forces a draw.
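
The core of the unbeatable opponent can be written without any GUI code. This is a minimal minimax sketch, assuming the board is a list of nine cells holding "X", "O", or " " and that the AI plays "O"; those representation choices are assumptions made for the example.

```python
WIN_LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8),   # rows
             (0, 3, 6), (1, 4, 7), (2, 5, 8),   # columns
             (0, 4, 8), (2, 4, 6)]              # diagonals


def winner(board):
    """Return 'X' or 'O' if someone has three in a row, else None."""
    for a, b, c in WIN_LINES:
        if board[a] != " " and board[a] == board[b] == board[c]:
            return board[a]
    return None


def minimax(board, player, ai="O"):
    """Return (score, move) for the side to move.

    Scores are from the AI's perspective: +1 win, 0 draw, -1 loss.
    """
    won = winner(board)
    if won:
        return (1 if won == ai else -1), None
    moves = [i for i, cell in enumerate(board) if cell == " "]
    if not moves:
        return 0, None  # board full: draw
    other = "X" if player == "O" else "O"
    best_score, best_move = None, None
    for move in moves:
        board[move] = player            # try the move
        score, _ = minimax(board, other, ai)
        board[move] = " "               # undo it
        if best_score is None:
            better = True
        elif player == ai:
            better = score > best_score  # AI maximizes
        else:
            better = score < best_score  # opponent minimizes
        if better:
            best_score, best_move = score, move
    return best_score, best_move


if __name__ == "__main__":
    # X threatens the top row; O must block at index 2.
    board = ["X", "X", " ",
             " ", "O", " ",
             " ", " ", " "]
    print(minimax(board, "O"))
```

A position like the one in the demo, where only one move avoids a loss, is a good candidate for the "critical branch" explanation students are asked to write.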

The Drag of Fragmented Feedback

At this point, most classes manage drafts, source lists, and feedback through a mix of folders and comment threads because it is familiar and requires no new tools. As projects scale, those threads fragment and teachers spend time reconciling versions, chasing citations, and re-teaching source-use.

Solutions like HyperWrite centralize writing, citation-backed research, and template completions so teachers can set constraints, review consistent artifacts, and cut time spent hunting sources while preserving assessment rigor.

12. Dungeon Master

- Purpose: Build interactive fiction driven by AI narration.

- Skills developed: Narrative design, prompt engineering, state tracking.

- Tools/materials: Python or Node, prompt templates, story-state files.

- Example: Players navigate a branching dungeon where the AI describes consequences and puzzles.

- Best practices: Log player choices and require a map showing branching points students coded.

13. Virtual Pet

- Purpose: Teach stateful systems and simple reinforcement learning.

- Skills developed: User modeling, reward signals, UI design.

- Tools/Materials: Tkinter for GUI, small state machine codebase.

- Example: Pet learns to prefer certain actions after repeated rewards.

- Best practices: Limit complexity, require a document explaining the reward design and failure modes.
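
The "learns to prefer certain actions" behavior can be modeled with a very small value-update rule before any GUI is attached. The sketch below is one simple interpretation, assuming an incremental update of each action's estimated value and occasional random exploration; the class name, parameters, and action names are illustrative, and this is far simpler than full reinforcement learning.

```python
import random


class VirtualPet:
    """Keeps a running value estimate per action and prefers the best one."""

    def __init__(self, actions, learning_rate=0.2, explore=0.1, rng=None):
        self.values = {action: 0.0 for action in actions}
        self.learning_rate = learning_rate
        self.explore = explore          # chance of trying a random action
        self.rng = rng or random.Random()

    def choose(self):
        """Usually pick the highest-valued action; sometimes explore."""
        if self.rng.random() < self.explore:
            return self.rng.choice(list(self.values))
        return max(self.values, key=self.values.get)

    def reward(self, action, amount):
        """Nudge the action's value toward the reward it just received."""
        old = self.values[action]
        self.values[action] = old + self.learning_rate * (amount - old)


if __name__ == "__main__":
    pet = VirtualPet(["sit", "fetch", "roll"], rng=random.Random(0))
    for _ in range(10):
        pet.reward("fetch", 1.0)   # the owner keeps rewarding "fetch"
    print(pet.values)
    print(pet.choose())
```

The reward-design document can then explain choices such as the learning rate and the exploration chance, and what goes wrong when rewards are inconsistent or when exploration is set to zero too early.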

14. Chess

- Purpose: Implement classic search algorithms and performance tuning.

- Skills developed: Minimax with pruning, evaluation heuristics, performance profiling.

- Tools/Materials: Python, chess libraries, testing suites.

- Example: Build a competitive engine and profile move selection time at various depths.

- Best practices: Require a performance report showing memory and time tradeoffs for a chosen depth.
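
Pruning is easier to study on a toy game tree than on a full chess engine. The sketch below shows alpha-beta pruning over nested lists, where inner lists are positions and numbers are leaf evaluations; the tree, the `stats` counter, and the function name are assumptions for illustration and do not use any chess library.

```python
import math


def alphabeta(node, maximizing, alpha=-math.inf, beta=math.inf, stats=None):
    """Minimax with alpha-beta pruning over a nested-list game tree."""
    if stats is not None:
        stats["visited"] += 1           # count nodes for the performance report
    if not isinstance(node, list):
        return node                     # leaf: static evaluation score
    if maximizing:
        value = -math.inf
        for child in node:
            value = max(value, alphabeta(child, False, alpha, beta, stats))
            alpha = max(alpha, value)
            if alpha >= beta:
                break                   # opponent will never allow this branch
        return value
    value = math.inf
    for child in node:
        value = min(value, alphabeta(child, True, alpha, beta, stats))
        beta = min(beta, value)
        if alpha >= beta:
            break                       # we already have a better option
    return value


# A depth-2 toy tree: the root maximizes, its children minimize.
TREE = [[3, 5], [2, 9], [0, 7]]

if __name__ == "__main__":
    stats = {"visited": 0}
    print(alphabeta(TREE, True, stats=stats))
    print(stats)  # fewer than the 10 nodes in the full tree
```

Comparing the visited-node count with the total number of nodes at each depth gives students a concrete number to put in the time and memory tradeoff report.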

15. Recommended Reading System

- Purpose: Build a recommendation engine using content or collaborative filtering.

- Skills developed: Similarity metrics, sparse-matrix handling, evaluation metrics like MAP.

- Tools/Materials: Sample user logs, small article corpus, Python libraries.

- Example: Recommend three articles based on a short preference survey and measure click-through in a pilot.

- Best practices: Have students document cold-start strategies and dataset limitations.
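
For the content-based variant, a similarity metric over topic weights is enough to get started. This sketch assumes each article and each survey response is a sparse vector stored as a dictionary of topic weights; the article titles and weights are invented, and cosine similarity is just one of several metrics students could compare.

```python
import math


def cosine(a, b):
    """Cosine similarity between two sparse vectors stored as dicts."""
    dot = sum(weight * b.get(key, 0.0) for key, weight in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0                      # empty profile: no basis for similarity
    return dot / (norm_a * norm_b)


def recommend(preferences, articles, k=3):
    """Return the k article titles most similar to the preference vector."""
    ranked = sorted(articles,
                    key=lambda title: cosine(preferences, articles[title]),
                    reverse=True)
    return ranked[:k]


# Hypothetical corpus: topic weights per article.
ARTICLES = {
    "Mars Rover Update": {"space": 1.0, "robots": 1.0},
    "Black Holes 101": {"space": 1.0},
    "Build a Line-Following Robot": {"robots": 1.0, "coding": 1.0},
    "History of Jazz": {"music": 1.0},
}

if __name__ == "__main__":
    survey = {"space": 1.0, "robots": 0.5}   # from a short preference survey
    print(recommend(survey, ARTICLES))
```

An empty survey gives every article a similarity of zero, which is a small, visible instance of the cold-start problem students are asked to document.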

16. Participate in an AI Hackathon

- Purpose: Teach rapid prototyping, collaboration, and time management.

- Skills developed: Pitchcraft, MVP scoping, version control.

- Tools/materials: Hackathon rules, team roles, basic cloud or local deployment.

- Example: 48-hour challenge to build an accessibility tool that summarizes lectures.

- Best practices: Debrief on decisions that were cut and which prototypes survived user testing.

17. Analyzing Social Media’s Influence on Stock Markets

- Purpose: Combine NLP with financial data to test hypothesis-driven questions.

- Skills developed: Sentiment analysis, time-series correlation, ethical scraping practices.

- Tools/Materials: Tweepy or Reddit API, Yahoo Finance, NLTK, visualization.

- Example: Correlate sentiment spikes on selected forums with intraday price moves and report confidence intervals.

- Best practices: Emphasize sampling bias, avoid overclaiming causation, and require a replication plan.
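
Once sentiment scores and price changes are aligned by time period, the correlation step itself is short. The sketch below computes a Pearson correlation by hand so students can see each term; the two sample series are placeholder numbers, not real market or forum data, and confidence intervals are left out.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two series of equal length with at least 2 points")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0                      # a flat series carries no signal
    return cov / math.sqrt(var_x * var_y)


# Placeholder values: average sentiment per period and percent price change.
SENTIMENT = [0.1, 0.4, -0.2, 0.3, -0.5]
PRICE_CHANGE = [0.5, 1.2, -0.3, 0.8, -1.1]

if __name__ == "__main__":
    print(round(pearson(SENTIMENT, PRICE_CHANGE), 3))
```

A high coefficient on a handful of points says little on its own, so this is a good place to revisit sampling bias and the difference between correlation and causation.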

18. Homework and Time Management App

- Purpose: Build a usable app that suggests study plans based on behavior.

- Skills developed: UX, backend integration, basic predictive modeling.

- Tools/Materials: Flask or Django, calendar APIs, simple ML models.

- Example: App predicts likely completion time and suggests an optimized study block.

- Best practices: Log user overrides and use them to refine models rather than discard them.

19. Detecting Bias in Machine Learning Models

- Purpose: Teach auditing techniques and the social impact of datasets.

- Skills developed: Dataset stratification, fairness metrics, explainability tools like LIME.

- Tools/Materials: FairFace or UTKFace dataset, model training code, explainability libraries.

- Example: Reproduce an image-cropping bias test and analyze demographic splits in outputs.

- Best practices: Frame tests as investigations, require a remediation proposal and an evaluation plan.
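
A first audit can be done with per-group counts before bringing in explainability libraries. The sketch below compares accuracy and positive-prediction rate across groups and reports the gap in positive rates, one simple fairness measure often called demographic parity difference. The group labels and records are invented, and real audits typically look at several metrics.

```python
from collections import defaultdict


def group_rates(records):
    """Compute accuracy and positive-prediction rate for each group.

    records: iterable of (group, y_true, y_pred) with binary 0/1 labels.
    """
    stats = defaultdict(lambda: {"n": 0, "correct": 0, "positive": 0})
    for group, truth, pred in records:
        entry = stats[group]
        entry["n"] += 1
        entry["correct"] += int(truth == pred)
        entry["positive"] += int(pred == 1)
    return {
        group: {
            "accuracy": entry["correct"] / entry["n"],
            "positive_rate": entry["positive"] / entry["n"],
        }
        for group, entry in stats.items()
    }


def parity_gap(rates):
    """Largest difference in positive-prediction rate between any two groups."""
    values = [r["positive_rate"] for r in rates.values()]
    return max(values) - min(values)


# Invented audit records: (group, true label, predicted label).
RECORDS = [
    ("A", 1, 1), ("A", 0, 0), ("A", 1, 1), ("A", 0, 1),
    ("B", 1, 0), ("B", 0, 0), ("B", 1, 1), ("B", 0, 0),
]

if __name__ == "__main__":
    rates = group_rates(RECORDS)
    print(rates)
    print(parity_gap(rates))
```

In this toy data both groups have the same accuracy but very different positive rates, which illustrates why a single headline metric can hide a disparity and why the remediation proposal needs its own evaluation plan.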

20. Creative Art Generation with GANs

- Purpose: Explore generative models and artistic iteration.

- Skills developed: GAN architecture basics, dataset curation, style blending.

- Tools/materials: TensorFlow or PyTorch, curated image sets, compute resources.

- Example: Train a GAN to blend Renaissance portraits with street photography and present a gallery.

- Best practices: Discuss copyright and dataset provenance, and present before-and-after noise-control experiments.

21. AI-Powered Music Composition

- Purpose: Use models to extend motifs and explore genre conventions.

- Skills developed: Sequence modeling, MIDI handling, emotion-to-parameter mapping.

- Tools/Materials: Magenta Studio, MIDI keyboard, DAW for rendering.

- Example: Feed a two-bar motif and ask the model to produce four continuations in different moods.

- Best practices: Require students to annotate which generated bars they kept and why.

22. Scriptwriting with AI Collaboration

- Purpose: Co-create dialogue and scene structure while preserving authorial voice.

- Skills developed: Character consistency, pacing, revision management.

- Tools/materials: Script templates, character bibles, version control.

- Example: Students supply character outlines and iterate on three alternate opening scenes generated by AI.

- Best practices: Require a commentary that compares tones across iterations and explains final choices.

Process Artifacts for Learning Gains

Classroom mechanics that help these activities stick, especially in larger courses, include process artifacts such as versioned drafts, short reflections, and annotated source lists. When students must show their work, the AI becomes a training tool, not a shortcut. The pattern we observe is straightforward: practice combined with evidence yields measurable learning gains, not polished bypasses.

Increased Engagement and Integration

The uptake in classrooms is visible: 75% of high school students reported increased engagement in learning through AI activities (source: AI engagement statistics). Teachers are also responding, with over 50% integrating AI tools into their high school curricula (source: AI adoption in education).

Reasoning Over Polish

What most surprises teachers is how quickly students move from skepticism to ownership once you require explanations and citations for AI outputs; the work becomes about reasoning, not polish. That’s where the next step matters most; what we try next will reveal whether these tools become training wheels or a crutch.

Try our AI Writing Assistant to Write Natural-sounding Content

When we piloted HyperWrite in classrooms over the course of a semester, a pattern emerged: students routinely challenged generic AI outputs until the system adapted, asking clarifying questions and aligning more closely with their individual voice.

This aligns with broader findings, as the Natural Write User Survey reports that 85% of users experienced improved writing quality after using an AI writing assistant, and the Natural Write Efficiency Study reveals that users saved an average of 30 minutes per article.
